
Why Your ML Model Is Losing Money (Even If It Looks Perfect)

You built a machine learning model that predicts which users will buy your product. It scores 94% accuracy on your test data. Your team celebrates. You push it to production. A week later, revenue drops 3%. What happened?

This isn’t a rare mistake. It’s the norm. Statsig’s 2023 analysis found that 68% of companies see a gap between how well their ML models perform in development and how they actually affect business outcomes. A model with better accuracy can still make worse decisions in the real world. Why? Because accuracy isn’t revenue. F1 score isn’t retention. Precision doesn’t pay the bills.

The truth is, most ML teams optimize for the wrong things. They tune hyperparameters, tweak loss functions, and chase higher validation scores, all while ignoring whether their model actually helps the business. That’s where A/B testing for ML decisions changes everything. It’s not about proving your model works. It’s about proving it makes money.

How ML A/B Testing Is Different From Regular A/B Testing

Traditional A/B testing compares two static versions: blue button vs. green button. You split traffic 50/50, wait for statistical significance, and pick the winner. Simple.

ML A/B testing is messier. You’re not testing a button color; you’re testing a dynamic, personalized decision engine. One user sees a recommendation based on their past behavior. Another sees something entirely different based on their location, device, and browsing history. The model adapts in real time. That’s powerful, but it’s also harder to measure.

Here’s the key difference:

- Regular A/B test: One variant wins for everyone.

- ML A/B test: The model learns who gets what, and the winning strategy isn’t a single version; it’s the algorithm itself.

For example, a travel site tested two approaches: a static ranking of hotels (control) vs. an ML model that personalized rankings based on user history (challenger). The ML model didn’t just improve clicks; it increased completed bookings by 7.3%. Why? Because it didn’t just show popular hotels. It showed the ones users were actually likely to book based on their past behavior.

But here’s the catch: if you only track a surface metric like clicks, you might think the ML model is a success. One fintech company optimized for loan approval rates and saw a 12% increase in applications, but loan defaults jumped 0.9%. They measured volume, not risk. Big mistake.

What You Must Measure (Beyond Clicks and Conversions)

Most teams stop at the obvious metrics: conversion rate, revenue per user, click-through rate. But ML models affect behavior over time. If you only look at day one, you’ll miss the real story.

Here’s what you need to track, in order of importance:

- Primary business metric: What’s your goal? More sales? Higher retention? Lower support calls? Pick one. Don’t try to optimize for everything.

- Guardrail metrics: These are the things you don’t want to hurt. For e-commerce: average order value, return rate. For SaaS: churn, support tickets. For fintech: default rates, fraud incidents.

- Long-term value: Customer lifetime value (LTV) matters more than first-day revenue. A model that boosts initial purchases but kills retention is a net loss.

- Engagement quality: Are users spending more time? Are they coming back? Or are they clicking once and leaving? Clicks aren’t engagement.
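The claim that LTV matters more than first-day revenue is easy to check with arithmetic. Below is a toy sketch, assuming a constant per-period retention rate and no time discounting (a deliberate simplification, not a standard LTV model); the revenue and retention numbers are made up.

```python
def simple_ltv(revenue_per_period: float, retention: float, periods: int) -> float:
    """Toy lifetime value: revenue in each period, weighted only by the chance
    the customer is still active (constant retention rate, no time discounting)."""
    if not 0.0 <= retention <= 1.0:
        raise ValueError("retention must be between 0 and 1")
    # Period t (starting at 0) contributes revenue * retention**t.
    return sum(revenue_per_period * retention**t for t in range(periods))


# Hypothetical variants: the challenger earns 10% more per active customer
# per period, but keeps fewer customers from one period to the next.
control = simple_ltv(revenue_per_period=20.0, retention=0.80, periods=12)
challenger = simple_ltv(revenue_per_period=22.0, retention=0.70, periods=12)
print(f"control: {control:.2f}, challenger: {challenger:.2f}")
```

With these made-up inputs, the challenger wins the first period (22 vs. 20 per customer) but ends the 12-period window behind (about 72 vs. 93).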

One company running ML-powered product recommendations noticed a 15% increase in clicks but a 4% drop in purchases. It turned out the model was pushing low-quality, high-margin items that looked appealing but didn’t meet user needs. They fixed it by adding a guardrail: only recommend items with a 4+ star rating and a return rate under 10%.
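The star-rating and return-rate guardrail in that example can be applied as a filter before ranking. This is a minimal sketch; the `Item` fields, the scores, and the choice to filter before sorting are illustrative assumptions, not that company's actual implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    item_id: str
    star_rating: float   # average customer rating, 0-5
    return_rate: float   # fraction of orders returned, 0-1
    score: float         # model's predicted relevance (hypothetical)


def apply_quality_guardrail(items, min_rating=4.0, max_return_rate=0.10):
    """Drop items that fail the quality guardrail, then rank the rest by model score."""
    eligible = [
        it for it in items
        if it.star_rating >= min_rating and it.return_rate < max_return_rate
    ]
    return sorted(eligible, key=lambda it: it.score, reverse=True)


candidates = [
    Item("a", 4.6, 0.04, 0.71),
    Item("b", 3.9, 0.02, 0.95),  # high score, but rated below 4 stars
    Item("c", 4.2, 0.15, 0.88),  # high score, but returned too often
    Item("d", 4.8, 0.06, 0.64),
]
print([it.item_id for it in apply_quality_guardrail(candidates)])  # prints ['a', 'd']
```

Filtering before ranking means no score, however high, can push an ineligible item into the list.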

Without guardrails, you’re flying blind. With them, you protect your business while experimenting.

How to Set Up a Real ML A/B Test (Step by Step)

Here’s how to run a valid ML A/B test without wasting months or breaking your product.

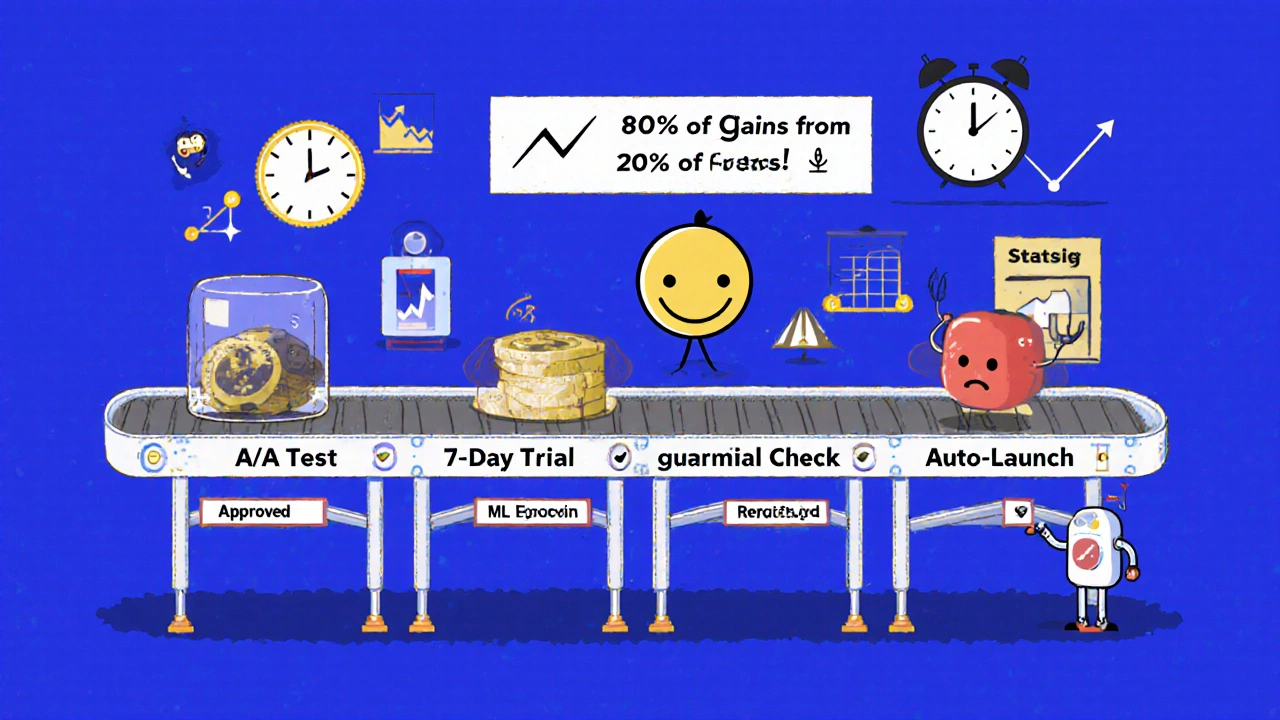

- Start with an A/A test. Before you test your ML model, run a test where both variants use the same model. This checks whether your tracking, traffic splitting, and analytics are working. If the two groups show a statistically significant difference, your setup is probably broken (keep in mind that at p < 0.05, about 1 in 20 A/A comparisons will look significant by pure chance). 92% of experts say this is non-negotiable.

- Split traffic randomly by user ID. Never split by session or device. Use a stable identifier like user ID or anonymous user key. This ensures the same user always sees the same version. Otherwise, you’ll mess up retention and LTV measurements.

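- Use a 50/50 split (at first). Don’t start with 90/10. You need enough data to detect real differences. For a baseline conversion rate of 5%, a standard two-proportion power calculation (80% power, p < 0.05) needs about 1,060 users per variant to detect a 3-percentage-point change (5% to 8%), but about 336,000 users per variant to detect a 3% relative change (5% to 5.15%). With 10,000 users per variant, the smallest lift you can reliably detect is roughly 0.9 percentage points (5% to about 5.9%).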

- Run it for at least 7-14 days. Short tests (under a week) get fooled by novelty effects. Users react to change, even bad change, by clicking more at first. Wait for behavior to settle.

- Use statistical significance, not gut feeling. Set your threshold at p < 0.05 and power at 80%. Don’t peek at results daily and stop the moment something looks significant; let automated alerts flag problems instead. Dr. Andrew Gelman found that 30% of "significant" results in industry tests are false positives because teams check too often.

- Automate the decision. Don’t manually interpret results. Use tools like Statsig or Seldon that auto-calculate significance, track guardrails, and stop tests when results are clear.
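Two of the setup steps above, stable user-level assignment and checking that your sample is large enough, can be sketched in Python. The assignment function uses one common approach (hashing the user ID, salted with an experiment name, into 10,000 buckets); the experiment name `recs-v2` is hypothetical. The sample-size function is the textbook normal-approximation formula for a two-sided two-proportion z-test; commercial platforms may use different methods, such as sequential or Bayesian tests.

```python
import hashlib
import math
from statistics import NormalDist


def assign_variant(user_id: str, experiment: str, treatment_share: float = 0.5) -> str:
    """Deterministically bucket a user by hashing a stable ID.

    The same (experiment, user_id) pair always lands in the same variant,
    regardless of session or device.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # 10,000 buckets
    return "treatment" if bucket < treatment_share * 10_000 else "control"


def sample_size_per_variant(p_base: float, p_target: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Users per variant for a two-sided two-proportion z-test
    (normal approximation, unpooled variance)."""
    if p_base == p_target:
        raise ValueError("p_target must differ from p_base")
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = z.inv_cdf(power)           # about 0.84 for 80% power
    variance = p_base * (1 - p_base) + p_target * (1 - p_target)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p_target - p_base) ** 2)


print(assign_variant("user-42", "recs-v2"))      # same answer on every call
print(sample_size_per_variant(0.05, 0.08))      # 5% -> 8% (3-point lift)
print(sample_size_per_variant(0.05, 0.0515))    # 5% -> 5.15% (3% relative lift)
```

Salting the hash with the experiment name means a user's bucket in one experiment doesn't determine their bucket in the next.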

One team at a digital media company tried to save time by running tests for only 3 days. They thought their ML model increased engagement. Turns out, it was a novelty effect. After two weeks, engagement dropped below baseline. They lost 11% of returning users.

The Tools That Actually Work (And Which Ones to Avoid)

You don’t need to build this from scratch. But not all tools are created equal.

Best for most teams: Statsig, Seldon, Wallaroo

- They integrate directly with your ML pipeline.

- They handle traffic splitting, statistical analysis, and guardrail monitoring automatically.

- They have SDKs for web, mobile, and backend systems.

- Statsig’s 2023 user reviews show a 4.7/5 rating, with 42 out of 58 users praising its "intuitive statistical analysis."

For advanced teams with data science resources: Vowpal Wabbit (originally developed at Yahoo! Research, later at Microsoft Research), Apache MXNet

- Open-source. Free.

- Require heavy engineering to set up tracking and analysis.

- Only worth it if you have a team of 5+ data engineers.

- Lower satisfaction: G2 ratings average 4.1/5 vs. 4.8/5 for Statsig.

Avoid: Manual Excel spreadsheets, basic Google Optimize (which Google shut down in September 2023), or custom SQL queries.

One startup tried to run ML A/B tests using Google Analytics and manual CSV exports. It took 3 weeks to get results. They missed a 5% revenue lift because they didn’t track LTV. They ended up rolling back a winning model.

Common Mistakes (And How to Avoid Them)

Here’s what goes wrong, and how to fix it.

- Mistake: Optimizing for offline metrics (accuracy, F1 score). Fix: Always measure online business impact. Ron Kohavi’s research at Microsoft showed a model with 0.5% lower accuracy generated 3.2% more revenue.

- Mistake: Running too few experiments. Fix: Run more of them. Wharton found companies running 50+ tests a year see 8.2% higher conversion rates than those running fewer than 10.

- Mistake: Ignoring novelty effects. Fix: Run tests for at least 10-14 days. Watch for dips after the first week.

- Mistake: No guardrails. Fix: Define 3-5 metrics you won’t let drop. If any of them move negatively, pause the test.

- Mistake: Testing only one model. Fix: Compare your ML model against a simple rule-based system (e.g., "show most popular items"). If your ML model doesn’t beat the baseline, why are you using it?
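Two fixes from the list, comparing the ML model against a simple rule-based baseline and pausing when a guardrail moves the wrong way, can be sketched as follows. The pooled two-proportion z-test is a standard choice for conversion rates; the conversion counts, metric names, and tolerances are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test (pooled variance) for a difference in conversion rates.
    Returns (absolute lift of B over A, p-value)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    return p_b - p_a, 2 * (1 - NormalDist().cdf(abs(z)))


def guardrail_breaches(control, treatment, rules):
    """rules maps metric -> (higher_is_better, tolerance). A guardrail is breached
    when the metric moves in the bad direction by more than `tolerance` (relative)."""
    breached = []
    for metric, (higher_is_better, tolerance) in rules.items():
        rel_change = (treatment[metric] - control[metric]) / control[metric]
        bad_move = -rel_change if higher_is_better else rel_change
        if bad_move > tolerance:
            breached.append(metric)
    return breached


# Hypothetical results: "show most popular items" rule (A) vs. ML model (B).
lift, p_value = two_proportion_z_test(conv_a=500, n_a=10_000, conv_b=590, n_b=10_000)
print(f"lift={lift:.4f}, p={p_value:.4f}")

rules = {"avg_order_value": (True, 0.02), "return_rate": (False, 0.05)}
control = {"avg_order_value": 52.0, "return_rate": 0.080}
treatment = {"avg_order_value": 51.5, "return_rate": 0.090}
print(guardrail_breaches(control, treatment, rules))  # prints ['return_rate']
```

In this made-up case the ML model beats the baseline on conversions, but the return-rate guardrail is breached, so the test should be paused rather than the model shipped.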

One team optimized their recommendation engine for click-through rate. Clicks went up 20%. Sales dropped 1.8%. They fixed it by adding a new metric: "conversion rate from recommended items." That’s the only metric that mattered.
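A metric like "conversion rate from recommended items" only works if it is defined precisely. One possible definition, sketched below under an assumed event schema of time-ordered `(user_id, event_type, item_id)` tuples, counts a purchase only when the same user previously clicked that item in a recommendation. Both the schema and the per-impression denominator are assumptions.

```python
from collections import Counter


def recommendation_funnel(events):
    """Compute click-through rate and recommendation conversion rate.

    `events` is an iterable of (user_id, event_type, item_id) tuples in time
    order, where event_type is "impression", "click", or "purchase" (an
    assumed schema). A purchase counts only if the same user clicked that
    item in a recommendation earlier in the stream.
    """
    counts = Counter()
    clicked = set()
    for user_id, event_type, item_id in events:
        if event_type == "impression":
            counts["impressions"] += 1
        elif event_type == "click":
            counts["clicks"] += 1
            clicked.add((user_id, item_id))
        elif event_type == "purchase" and (user_id, item_id) in clicked:
            counts["rec_purchases"] += 1
    impressions = counts["impressions"] or 1  # avoid dividing by zero on empty logs
    return {
        "ctr": counts["clicks"] / impressions,
        "rec_conversion": counts["rec_purchases"] / impressions,
    }


events = [
    ("u1", "impression", "a"), ("u1", "click", "a"), ("u1", "purchase", "a"),
    ("u2", "impression", "b"), ("u2", "click", "b"),
    ("u3", "impression", "c"), ("u3", "purchase", "c"),  # bought, but not via the rec
    ("u4", "impression", "d"),
]
print(recommendation_funnel(events))  # prints {'ctr': 0.5, 'rec_conversion': 0.25}
```

In this toy log, half the impressions get a click, but only one in four leads to a purchase of the recommended item; the u3 purchase doesn't count because it didn't come through the recommendation.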

The Future: Continuous Experimentation

The best companies aren’t running monthly A/B tests. They’re running them daily.

"Continuous experimentation" means every model update triggers a new test automatically. If you retrain your model, it doesn’t go live until it’s proven better in a live test. This isn’t science fiction; it’s happening now.
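The rule that a retrained model doesn't go live until it's proven better can be written as a small promotion gate. This is a hypothetical sketch: the `ExperimentResult` fields, the decision labels, and the choice to roll back on any guardrail breach are assumptions, and the inputs would come from whatever testing platform you use.

```python
from dataclasses import dataclass, field


@dataclass
class ExperimentResult:
    lift: float                  # treatment minus control on the primary metric
    p_value: float               # from the pre-planned significance test
    reached_sample_size: bool    # has the planned sample size been collected?
    guardrail_breaches: list = field(default_factory=list)


def promotion_decision(result: ExperimentResult, alpha: float = 0.05) -> str:
    """Decide what to do with a retrained model after its live test."""
    if result.guardrail_breaches:
        return "rollback"        # a breached guardrail overrides everything else
    if not result.reached_sample_size:
        return "keep_running"    # don't decide on partial data
    if result.p_value < alpha and result.lift > 0:
        return "promote"         # proven better on the primary metric
    return "rollback"            # not proven better: keep the current model


print(promotion_decision(ExperimentResult(lift=0.004, p_value=0.01, reached_sample_size=True)))
```

Checking guardrails first means a model that wins on the primary metric but hurts, say, return rate still never reaches full traffic.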

O’Reilly’s September 2023 survey found 78% of data science leaders plan to implement this by 2025. Statsig’s real-time Bayesian analysis (launched August 2023) cuts required sample sizes by up to 35%, making daily tests feasible.
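Statsig's exact method isn't described here, so as a generic illustration of Bayesian A/B analysis: with a Beta-Binomial model and uniform Beta(1, 1) priors, each variant's conversion rate has a Beta posterior, and the probability that the treatment beats control can be estimated by sampling. The counts below are hypothetical.

```python
import random


def prob_treatment_beats_control(conv_c, n_c, conv_t, n_t, draws=50_000, seed=0):
    """Monte Carlo estimate of P(rate_t > rate_c) under independent Beta(1, 1) priors.
    Each arm's posterior is Beta(1 + conversions, 1 + non-conversions)."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        rate_c = rng.betavariate(1 + conv_c, 1 + n_c - conv_c)
        rate_t = rng.betavariate(1 + conv_t, 1 + n_t - conv_t)
        if rate_t > rate_c:
            wins += 1
    return wins / draws


p = prob_treatment_beats_control(conv_c=500, n_c=10_000, conv_t=590, n_t=10_000)
print(f"P(treatment > control) ~ {p:.3f}")
```

Instead of a p-value, this gives a direct probability statement about which variant is better, which is often easier to act on; the priors and the decision threshold are still choices you have to make up front.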

Wharton’s research shows a surprising insight: running 10 small tests with 2,000 users each is more effective than running 2 large tests with 10,000 users. More experiments = more chances to find real wins. The Pareto principle holds: 80% of gains come from 20% of tests, but you won’t find those 20% unless you run enough tests.
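The catch with small tests is that each one can only detect large effects. Assuming each test splits its users 50/50 (1,000 vs. 5,000 users per variant) and using the same normal approximation as a standard power calculation, the minimum detectable lift at a 5% baseline can be computed like this:

```python
import math
from statistics import NormalDist


def min_detectable_lift(p_base, n_per_variant, alpha=0.05, power=0.80):
    """Smallest absolute lift a two-sided two-proportion z-test can detect
    (normal approximation). The treatment rate appears on both sides of the
    equation, so it is solved by simple fixed-point iteration."""
    z = NormalDist()
    k = (z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)) ** 2
    delta = 0.01  # initial guess for the absolute lift
    for _ in range(50):
        p_t = min(p_base + delta, 1.0)
        delta = math.sqrt(k * (p_base * (1 - p_base) + p_t * (1 - p_t)) / n_per_variant)
    return delta


# 2,000-user and 10,000-user tests, each split 50/50, at a 5% baseline.
for users in (2_000, 10_000):
    lift = min_detectable_lift(0.05, users // 2)
    print(f"{users:>6} users: detects 5% -> {0.05 + lift:.1%} or larger")
```

At a 5% baseline, a 2,000-user test can only reliably detect a jump to roughly 8%, while a 10,000-user test gets down to about 6.3%. That is the price of running many small tests instead of a few large ones.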

This isn’t about perfection. It’s about progress. The goal isn’t to build the perfect model. It’s to build the model that makes the most money-safely, reliably, and continuously.

Final Thought: Stop Optimizing Models. Start Optimizing Outcomes.

ML isn’t magic. It’s a tool. And like any tool, it’s only valuable if it solves a real problem.

Stop asking: "Is my model accurate?"

Start asking: "Did my model increase revenue? Reduce churn? Improve customer satisfaction?"

If you can’t answer those questions with data from a live A/B test, you’re not running ML; you’re running guesswork.

The companies winning with AI aren’t the ones with the fanciest models. They’re the ones who test everything, measure what matters, and never let a model go live without proof it helps the business.

Can I use A/B testing for any machine learning model?

Yes, but only if the model affects user behavior in a measurable way. A/B testing works for recommendation engines, personalization systems, pricing models, fraud detection, and search ranking. It doesn’t make sense for models that run in the background without user-facing impact, like internal data cleaning or predictive maintenance.

How long should an ML A/B test run?

At least 7-14 days, depending on your business cycle. For e-commerce, run it through at least one full week to capture weekend behavior. For SaaS, wait for monthly billing cycles to complete. Fix your sample size before the test starts, and never stop it before it reaches that sample size or before you’ve seen at least one full user cycle. Stopping the moment a result first crosses p < 0.05 inflates false positives.
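Turning a required sample size into a run length that respects both the 7-day minimum and full business cycles is simple arithmetic. This sketch assumes a steady number of new, unique users enter the test each day (real traffic varies, and returning users don't add new samples); the function and parameter names are hypothetical.

```python
import math


def run_length_days(required_per_variant, daily_new_users, n_variants=2,
                    min_days=7, cycle_days=7):
    """Days to run a test: enough to collect the required users in every variant,
    at least `min_days`, and rounded up to whole business cycles."""
    total_needed = required_per_variant * n_variants
    raw_days = math.ceil(total_needed / daily_new_users)
    days = max(raw_days, min_days)
    return math.ceil(days / cycle_days) * cycle_days


print(run_length_days(1_057, daily_new_users=300))                   # 14: two full weeks
print(run_length_days(1_057, daily_new_users=1_000, cycle_days=30))  # 30: one billing month
```

Rounding up to whole cycles means a test that has technically collected enough users on day 8 still runs to day 14, so both weekends are represented equally.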

What if my ML model performs worse in the test?

That’s not failure; that’s progress. Many models fail in live tests, and that’s why you test. If your model reduces revenue, increases churn, or hurts a guardrail metric, roll it back. You just saved your business from a costly mistake. The goal isn’t to make every model better; it’s to make sure only the ones that help go live.

Do I need a data scientist to run ML A/B tests?

You need someone who understands statistics and business metrics, not necessarily a PhD. Product managers, growth leads, and analysts can run tests successfully with the right tools. Platforms like Statsig and Seldon handle the math. Your job is to define what success looks like and interpret the results. Training takes 3-5 sessions for non-technical team members.

Can I use ML A/B testing in regulated industries like finance or healthcare?

Yes, but with extra care. GDPR and the EU AI Act require transparency and audit trails. You must document every test, track who made decisions, and prove your model doesn’t discriminate. Use tools that log all experiment variations and user assignments. Avoid black-box models if explainability is required by law. Start with low-risk use cases like email subject lines before moving to loan approvals or treatment recommendations.
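For regulated settings, the audit trail can start with one record per assignment. This is a hypothetical schema sketched with Python's standard `json` and `datetime` modules; which fields a regulator actually requires depends on your jurisdiction and use case, and the experiment, model, and team names are invented.

```python
import json
from datetime import datetime, timezone


def assignment_log_entry(experiment, model_version, user_key, variant, approved_by):
    """Build one append-only audit record for an experiment assignment.
    Field names are a hypothetical schema; store a pseudonymous user_key,
    not raw personal data."""
    record = {
        "logged_at": datetime.now(timezone.utc).isoformat(),
        "experiment": experiment,
        "model_version": model_version,
        "user_key": user_key,
        "variant": variant,
        "approved_by": approved_by,
    }
    return json.dumps(record, sort_keys=True)


entry = assignment_log_entry("credit-limit-v3", "model-2025-10-01", "a1b2c3",
                             "control", "risk-review-board")
print(entry)  # in production, append this line to write-once storage
```

Recording the model version and the approving team alongside each assignment is what lets you later show who decided what, and which model each user actually saw.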

How do I know if my A/B test results are real and not just noise?

Look for statistical significance (p < 0.05) and sufficient power (80% or higher). Check if the effect holds across subgroups (e.g., new vs. returning users). If the result disappears after 14 days, it’s likely a novelty effect. Always run an A/A test first to confirm your system isn’t biased. And never trust a result from a test with fewer than 5,000 users per variant.
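Two pieces of this advice, running an A/A test and not trusting results you got by checking early, can be illustrated with a small Monte Carlo simulation. It assumes identical arms (a true A/A test), a made-up 5% conversion rate, and 200 users per arm per day for 14 days, and compares the false-positive rate when you check once at the end against stopping at the first daily peek with p < 0.05.

```python
import random
from statistics import NormalDist


def z_test_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided pooled two-proportion z-test p-value."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    if pooled in (0.0, 1.0):
        return 1.0  # no variation at all: nothing to test
    se = (pooled * (1 - pooled) * (1 / n_a + 1 / n_b)) ** 0.5
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_aa(n_experiments=500, days=14, users_per_day=200, rate=0.05,
                alpha=0.05, seed=1):
    """Run simulated A/A tests (both arms share the same true rate) and return
    (false-positive rate checking once at the end,
     false-positive rate if you stop at the first significant daily peek)."""
    rng = random.Random(seed)
    final_hits = peek_hits = 0
    for _ in range(n_experiments):
        conv, n = [0, 0], [0, 0]
        peeked_significant = False
        for _ in range(days):
            for arm in (0, 1):
                conv[arm] += sum(rng.random() < rate for _ in range(users_per_day))
                n[arm] += users_per_day
            # A daily peeker declares a "winner" the first time p < alpha.
            if z_test_p_value(conv[0], n[0], conv[1], n[1]) < alpha:
                peeked_significant = True
        if peeked_significant:
            peek_hits += 1
        if z_test_p_value(conv[0], n[0], conv[1], n[1]) < alpha:
            final_hits += 1
    return final_hits / n_experiments, peek_hits / n_experiments


fixed_fpr, peeking_fpr = simulate_aa()
print(f"check once at the end: {fixed_fpr:.1%} false positives")
print(f"stop at first daily peek: {peeking_fpr:.1%} false positives")
```

With these settings, checking once at the end should give a false-positive rate near the nominal 5%, while stopping at the first significant daily peek produces substantially more false "winners". The exact figures depend on the seed and parameters.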

Next Steps: Start Small, Think Big

Don’t wait for the perfect model or the perfect tool. Pick one decision your team makes with ML, like product recommendations or search ranking, and test it. Run a 7-day A/B test with 50/50 traffic. Track revenue and one guardrail metric. If it works, scale it. If it doesn’t, learn why and try again.

The companies that win with AI aren’t the ones with the best algorithms. They’re the ones who test relentlessly, measure honestly, and never confuse model performance with business impact.

Astha Mishra

October 31, 2025 AT 05:30

It’s fascinating how we’ve convinced ourselves that accuracy is the holy grail, when in reality, it’s just a proxy, a shadow on the wall of the real fire. We optimize for numbers on a screen while the business bleeds out in quiet, unmeasured ways: customers who return once and never come back, orders that are refunded because the recommendation felt ‘off,’ support tickets piling up because the model pushed something confusing or inappropriate. The model doesn’t care about trust. It doesn’t care about loyalty. It only cares about minimizing its loss function. But we do. And that’s why we must measure what matters, not what’s easy to measure. I’ve seen teams spend months tuning a model to hit 95% precision, only to realize that their churn rate had spiked because users felt manipulated. The real win isn’t in the algorithm. It’s in the relationship you build with your customers through every decision you make. Let’s stop worshiping metrics and start honoring outcomes.

Kenny McMiller

October 31, 2025 AT 15:50

Bro, this is the exact reason why ML engineering is broken. Everyone’s chasing F1 scores like it’s a TikTok challenge, but no one’s asking if the model actually moves the needle on EBITDA. You need to think in terms of causal inference, not just correlation. ML A/B tests aren’t just about traffic splits; they’re about counterfactuals. If you’re not using doubly robust estimators or propensity score weighting, you’re just guessing. And don’t even get me started on novelty effects: those first-week spikes are pure placebo. Real signal takes time. Use Bayesian methods. Stop using p-values like they’re oracle bones. Statsig’s Bayesian engine is the only thing keeping me sane these days. Also, guardrails? Obviously. But define them *before* you ship, not after you’ve tanked retention. #MLops #causalitynotcorrelation

Dave McPherson

November 1, 2025 AT 10:48

Ugh. Another blog post pretending ML is some sacred ritual instead of just glorified curve-fitting with a side of corporate buzzwords. ‘Guardrail metrics’? ‘Long-term value’? ‘Continuous experimentation’? Please. You’re not optimizing outcomes; you’re optimizing your LinkedIn post. The truth? 90% of these ‘ML models’ are just logistic regression with a fancy wrapper and a $200k SaaS subscription. And don’t even mention ‘Vowpal Wabbit’ like it’s some arcane artifact; half the people using it can’t explain what a feature hash is. Meanwhile, the real winners? The ones who just A/B test button colors and call it a day. At least they know what they’re measuring. You? You’re just feeding the AI hype industrial complex. Go back to Excel. You’ll be happier. And your CFO will thank you.

RAHUL KUSHWAHA

November 1, 2025 AT 13:52